So over the past few weeks, the internet has gone crazy over Google’s brilliant artificial intelligence research project, “DeepDream”.

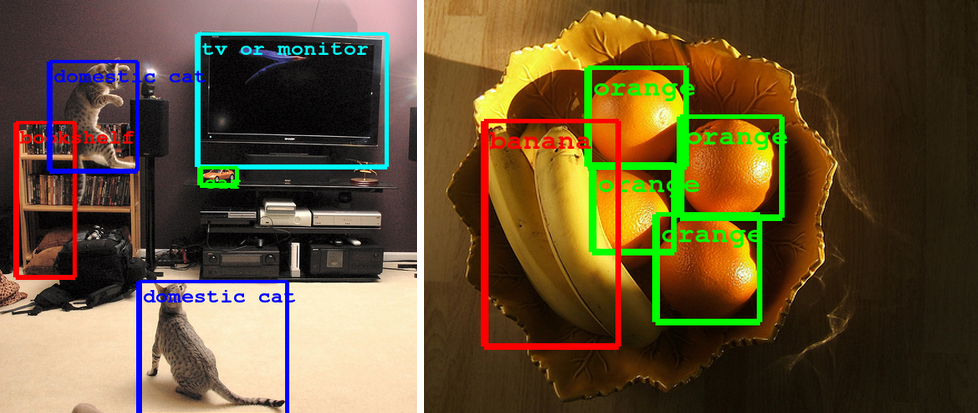

DeepDream is a Google research project on artificial neural networks, focused primarily on computer vision and image classification.

Artificial neural networks are a prominent and fast-growing field in machine learning. These learning models are loosely based on biological neural networks: they consist of layers of “neurons” that process an input by passing signals along links between neurons, piecing bits of information together as the signal moves from layer to layer. Each link has a strength (a weight), and training adjusts these weights until the network produces the outputs we want.
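To make “layers of neurons” and “links” a bit more concrete, here’s a minimal pure-Python sketch of a tiny network. It is not DeepDream’s code: the layer sizes, the weights and the ReLU activation are illustrative assumptions, and real image networks use many more (convolutional) layers whose weights are learned from data.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, biases) for one layer

def relu(x: float) -> float:
    """Activation function: let positive signals through, silence negative ones."""
    return max(0.0, x)

def dense_layer(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One layer of artificial neurons.

    weights[j][i] is the strength of the link from input i to neuron j.
    Each neuron adds up its weighted inputs plus a bias, then applies ReLU.
    """
    return [
        relu(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(inputs: Vector, layers: List[Layer]) -> Vector:
    """Feed the input through each layer in turn; each output becomes the next layer's input."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations

# Hypothetical toy network: 3 inputs -> 2 hidden neurons -> 1 output neuron.
toy_layers: List[Layer] = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.2]),
]

if __name__ == "__main__":
    print(forward([1.0, 2.0, 3.0], toy_layers))  # roughly [0.1]
```

Stacking more of these layers is what lets a network build up from simple patterns to whole objects.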

DeepDream specifically focuses on interpreting visual information, identifying patterns and objects in the images that are fed through the network. The network has been trained on millions of example images so that it learns the fundamental features of certain objects, which lets it detect patterns and identify those objects in new images.

Source: Google Research Blog
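Training is what turns millions of example images into useful link strengths. As a heavily simplified sketch, here is a single artificial neuron (logistic regression) learning to tell bananas from other fruit by gradient descent. The two hand-made features, the toy examples and the hyperparameters are all hypothetical; a real network like DeepDream’s learns its own features directly from pixels.

```python
import math
import random
from typing import List, Tuple

Example = Tuple[List[float], int]  # (features, label), label 1 = banana

def sigmoid(z: float) -> float:
    """Squash any number into (0, 1) so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights: List[float], bias: float, features: List[float]) -> float:
    """The neuron's estimated probability that the input is a banana."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train_neuron(examples: List[Example], epochs: int = 1000,
                 lr: float = 0.5, seed: int = 0) -> Tuple[List[float], float]:
    """Stochastic gradient descent on the cross-entropy loss."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in examples[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            # For a sigmoid neuron with cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (prediction - label).
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

# Hypothetical features: [yellowness, elongation], each between 0 and 1.
training_data: List[Example] = [
    ([0.9, 0.9], 1),  # banana
    ([0.8, 1.0], 1),  # banana
    ([0.9, 0.1], 0),  # lemon: yellow but round
    ([0.1, 0.9], 0),  # cucumber: long but green
    ([0.1, 0.1], 0),  # apple: neither
]

if __name__ == "__main__":
    w, b = train_neuron(training_data)
    for features, label in training_data:
        print(features, label, round(predict(w, b, features), 3))
```

After training, the neuron’s weights encode “yellow and long” as the banana pattern, which is a tiny version of the preconceived idea of an object that the next part relies on.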

Now here comes the cool part.

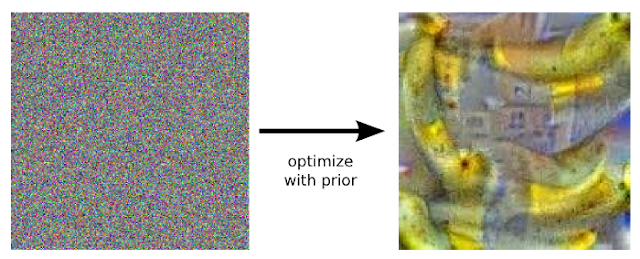

When these neural networks are fed images, they detect objects based on what they have learned an orange or a banana looks like. So essentially these machine brains have a preconceived idea of what a certain object should look like. As such, when they are fed random noise and told to search for bananas, they output this:

Source: Google Research Blog

Wait what???! Did a machine just create these bananas by itself???!

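Yes and no. The neural network doesn’t keep a database of banana pictures to copy from. During training it learned which shapes, colours and textures tend to make up a banana. Starting from the noise, it then tweaks the image a little at a time so that whatever faintly resembles those features becomes more banana-like, and after enough of these tweaks it “finds” a couple of bananas in an image that we perceive as pointless noise.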

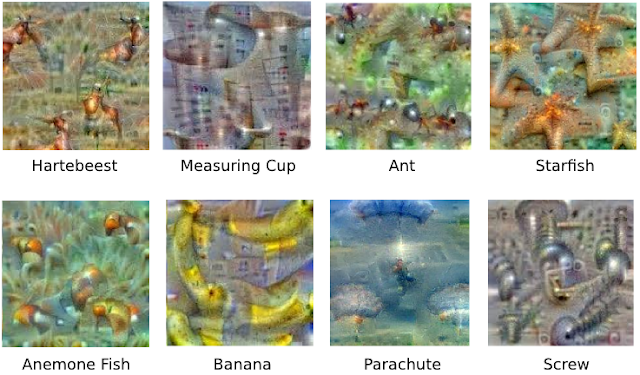

Fascinating, ain’t it? Here are a couple more:

Source: Google Research Blog
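The “tweak the noise until bananas appear” idea is gradient ascent on the input image rather than on the network’s weights. To keep this sketch tiny and runnable, the whole “network” is assumed to be a single hypothetical banana detector: a fixed template of weights over a six-pixel image. DeepDream does the same kind of nudging, but computes the gradient by backpropagating through a deep convolutional network.

```python
import math
import random
from typing import List

Image = List[float]  # a tiny grayscale "image", flattened, pixel values in [0, 1]

# Hypothetical "banana detector" the network is assumed to have learned:
# positive weights mark banana-like pixels, negative weights penalise others.
BANANA_TEMPLATE: List[float] = [-1.0, 0.5, 1.0, 1.0, 0.5, -1.0]

def banana_score(image: Image) -> float:
    """How banana-like the image looks: sigmoid of the template's weighted sum."""
    z = sum(w * p for w, p in zip(BANANA_TEMPLATE, image))
    return 1.0 / (1.0 + math.exp(-z))

def score_gradient(image: Image) -> List[float]:
    """d(score)/d(pixel_i) = s * (1 - s) * template_i, by the chain rule through the sigmoid."""
    s = banana_score(image)
    return [s * (1.0 - s) * w for w in BANANA_TEMPLATE]

def dream_from_noise(steps: int = 100, step_size: float = 2.0, seed: int = 42) -> Image:
    """Start from random noise and repeatedly nudge every pixel in the direction
    that raises the banana score, clamping pixels to the valid range [0, 1]."""
    rng = random.Random(seed)
    image = [rng.random() for _ in BANANA_TEMPLATE]
    for _ in range(steps):
        grad = score_gradient(image)
        image = [min(1.0, max(0.0, p + step_size * g)) for p, g in zip(image, grad)]
    return image

if __name__ == "__main__":
    dreamed = dream_from_noise()
    print(dreamed, round(banana_score(dreamed), 3))
```

The noise drifts toward exactly the pattern the detector is looking for, which is why the “dreamed” image ends up full of whatever the network was told to find.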

Google calls this “Inceptionism”, similar to the idea of Christopher Nolan’s film Inception, where a thought planted in your mind grows to become a reality. Similarly, these artificial mirrors of the biological brain have a bias towards what they perceive, based on the keyword that is fed into the system: the “planted thought”.

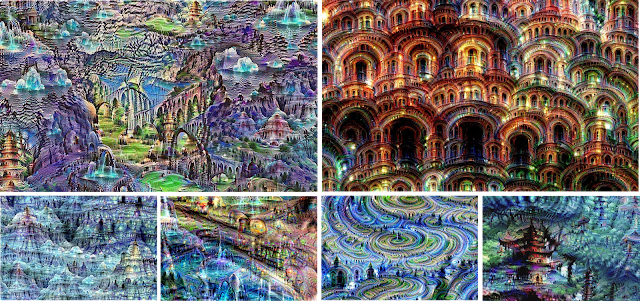

And it doesn’t end there. When these dream images are fed back into the neural network again and again, with each output (slightly zoomed in) becoming the next input, magic happens.

Source: Google Research Blog

SAY WHUTTTT????!?!?!??

These magnificent and wondrous dreamy landscapes come out of nothing more than a bunch of circuits and wires. Machines fed meaningless noise are able to churn out complex architectural and natural marvels, simply through neural connections. Wow. Simply mind-blowing…
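The feedback loop behind those landscapes can be sketched in a few lines: enhance the image, zoom in slightly, and feed the result back in as the next input. In this pure-Python toy, `enhance` is a hypothetical stand-in that simply amplifies whatever contrast is already there; in DeepDream that step would instead amplify whatever patterns a chosen layer of the network detects. The grid size, zoom factor and step counts are arbitrary assumptions.

```python
import random
from typing import List

Grid = List[List[float]]  # a tiny grayscale image, pixel values in [0, 1]

def enhance(image: Grid, steps: int = 3, step_size: float = 0.2) -> Grid:
    """Hypothetical stand-in for DeepDream's enhancement step.

    Gradient ascent on 0.5 * (p - 0.5)**2 for every pixel p: the gradient is
    (p - 0.5), so bright pixels get brighter and dark ones get darker.
    """
    for _ in range(steps):
        image = [
            [min(1.0, max(0.0, p + step_size * (p - 0.5))) for p in row]
            for row in image
        ]
    return image

def zoom(image: Grid, factor: float = 1.25) -> Grid:
    """Crop the centre of the image and stretch it back to full size
    (nearest-neighbour resampling), i.e. zoom in by `factor`."""
    h, w = len(image), len(image[0])
    crop_h, crop_w = max(1, int(h / factor)), max(1, int(w / factor))
    top, left = (h - crop_h) // 2, (w - crop_w) // 2
    return [
        [image[top + (r * crop_h) // h][left + (c * crop_w) // w] for c in range(w)]
        for r in range(h)
    ]

def dream_loop(image: Grid, iterations: int = 10) -> List[Grid]:
    """Repeatedly enhance, zoom, and feed the output back in as the next input."""
    frames = [image]
    for _ in range(iterations):
        image = zoom(enhance(image))
        frames.append(image)
    return frames

if __name__ == "__main__":
    rng = random.Random(0)
    noise = [[rng.random() for _ in range(8)] for _ in range(8)]
    frames = dream_loop(noise, iterations=5)
    print(len(frames))  # the starting noise plus 5 dream frames
```

Because each round starts from the previous round’s output, small patterns get exaggerated over and over, and the zoom keeps pulling new detail into view.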

And it makes you wonder: what is reality really made of? If a network of 10-30 layers of artificial neurons can produce dreamy landscapes and virtual realities, then what about the roughly 100 billion neurons in the average human brain? If a keyword can shape what a machine sees in noise, then don’t the numerous external stimuli play a significant role in what we perceive the world to be?

It is rather exciting to see the direction in which the research in the field of artificial intelligence is headed. Through studying neural networks and how they work, maybe we could move one step closer to breaking the code behind how the human mind works and how we perceive the vast universe around us.

If I get the chance, I’ll try to play around with the DeepDream source code and post the results here if I get something cool. To end off this fascinating post, here’s a trippy video of how DeepDream processes images and sees patterns.

Till next time! Keep glitching!